Understanding tokens and prompts is key for optimal LLMOps

Demystifying Tokens in LLMs

At tokes compare, we get asked how to present LLMs and generative AI to different audiences. This is the story our team developed (using ChatGPT, Bard and Midjourney) for a group of teenagers, to inspire creative writing. We would love to hear how you tailor your own messages.

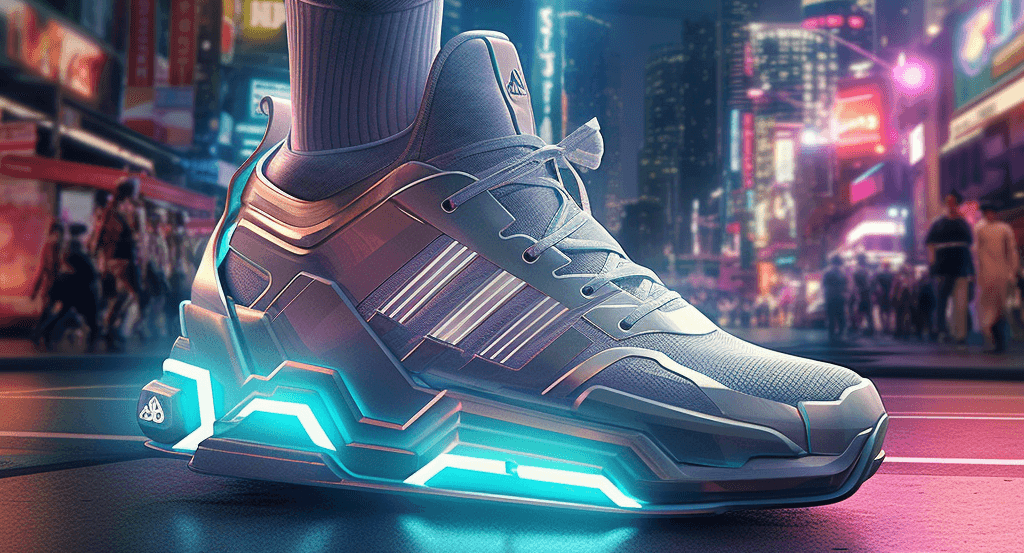

Once upon a time, in the bustling city of AI-Town, there existed an extraordinary company named OpenAI-das. This innovative company held a vision: to create the most advanced and comfortable "trainers" the world had ever seen. But these weren't ordinary trainers; they were AI trainers designed to help people communicate, solve problems, and improve the world.

OpenAI-das had a long history of creating fantastic AI trainer models, known as the GPT series. Each model was like a unique pair of trainers, tailored to specific needs and desires, with an ever-evolving style and functionality. People from all over the world flocked to AI-Town to see the latest releases and marvel at the brilliance of these AI trainers. They also wanted to learn more about how the trainers were made and the steps involved (do you get the pun?).

As the GPT series evolved, OpenAI-das knew it was time to create something truly ground-breaking. They set out to develop a new kind of AI trainer, the Adidas GPT-5, which would revolutionize the world of AI trainers. The Adidas GPT-5 was a breathtaking combination of various components, each serving a specific purpose. These included:

1. The Sole (LLM Architectural Design)

The process began with selecting the right model architecture, in this case, the Transformer architecture (they had considered using the new Receptance Weighted Key Value architecture, but decided on a tried and tested model). The Transformer architecture, much like the sole of a trainer, provided the foundation for the LLM. It allowed the model to process and understand vast amounts of data dynamically and efficiently.

2. The Trainer Body (Large-Scale Pre-training)

After setting the architecture, the designers initiated the large-scale pre-training process, akin to the body of the trainer that envelops and protects the foot. Using vast amounts of text and data (e.g., books and websites), GPT-5 learned the intricacies of language and context. This process enabled GPT-5 to generate highly accurate and coherent responses.

3. The Laces (Fine-Tuning)

Fine-tuning enabled GPT-5 to be adapted to specific tasks and industries. Just as laces ensure a perfect fit for the wearer, fine-tuning ensures that the LLM meets the unique needs and requirements of each specific application.

4. The Stitching (Self-Attention Mechanism)

The attention mechanism in GPT-5, symbolized by the stitching of the trainer, allowed the model to give more weight to the relevant parts of the input and less weight to the rest. This feature helped GPT-5 provide contextually accurate responses and generate meaningful text.
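To make the stitching a little more concrete, here is a minimal sketch of scaled dot-product self-attention in plain Python. It is a toy under stated assumptions: the three two-dimensional `embeddings` are made up, and each vector serves as its own query, key, and value, whereas a real Transformer learns separate projections and (in GPT-style models) masks future positions.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Toy self-attention: each vector is its own query, key, and value.

    Assumption: no learned projections and no causal mask.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # Score the query against every key, scaled by sqrt(dimension).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings: the first two point in similar directions.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)
```

Each row of `weights` sums to 1, and the first vector attends more strongly to the similar second vector than to the dissimilar third one, which is the "focus on what is relevant" behaviour in miniature.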

5. The Insole (Tokenization)

Just as an insole allows for comfortable strides, the tokenization process in GPT-5 breaks down the text into smaller, manageable pieces called tokens (words, parts of words, or punctuation marks). This process makes it easier for the model to understand and process language.
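As a rough illustration of breaking text into pieces, the sketch below splits a sentence into words and punctuation marks and maps each piece to an integer ID. It is a deliberately simple toy: the function names `toy_tokenize`, `build_vocab`, and `encode` are hypothetical, and production models typically use subword schemes such as byte-pair encoding rather than whole words.

```python
import re

def toy_tokenize(text):
    # Lowercase the text, then pull out word runs and single punctuation marks.
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(tokens):
    # Assign each new token the next free integer ID, in order of first appearance.
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

def encode(text, vocab):
    # Turn text into the list of IDs a model would actually consume.
    # Assumption: every token is already in the vocabulary.
    return [vocab[tok] for tok in toy_tokenize(text)]

tokens = toy_tokenize("The trainers fit, and the laces hold.")
vocab = build_vocab(tokens)
ids = encode("The laces fit.", vocab)
# tokens -> ['the', 'trainers', 'fit', ',', 'and', 'the', 'laces', 'hold', '.']
# ids    -> [0, 5, 2, 7]
```

The model never sees letters directly, only these IDs, which is also why usage and context limits are usually counted in tokens rather than in words.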

6. The Testing Phase (Reinforcement Learning from Human Feedback)

The creators of GPT-5 tested and refined the model using Reinforcement Learning from Human Feedback (RLHF) techniques. This process involved generating outputs, having human evaluators rank these outputs, and then optimizing the model to align with these preferences. This step helped GPT-5 exhibit enhanced context understanding and generate coherent, accurate, and contextually relevant responses.
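One common way to turn human rankings into a training signal can be sketched as follows. This is an assumption for illustration, not a description of how any particular model was built: the ranking is expanded into (preferred, rejected) pairs, and a pairwise logistic loss is small when a reward score agrees with the human preference. The labels and `scores` values are made up.

```python
import math
from itertools import combinations

def ranking_to_pairs(ranked_outputs):
    # Input is ordered best to worst, so each pair is (preferred, rejected).
    return list(combinations(ranked_outputs, 2))

def pairwise_loss(reward_preferred, reward_rejected):
    # Equals -log(sigmoid(difference)): near 0 when the preferred output
    # scores much higher, large when the order is the wrong way round.
    diff = reward_preferred - reward_rejected
    return math.log(1.0 + math.exp(-diff))

# Hypothetical human ranking of three generated outputs, best first.
pairs = ranking_to_pairs(["A", "B", "C"])

# Hypothetical reward scores that a reward model might assign.
scores = {"A": 2.0, "B": 0.5, "C": -1.0}
total_loss = sum(pairwise_loss(scores[p], scores[r]) for p, r in pairs)
```

Lowering `total_loss` pushes the scores to match the human ordering; in a full RLHF pipeline, a separate reinforcement learning step then tunes the language model to produce outputs that score well.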

7. The Trainer Design (User Interface Design)

Finally, the interface of GPT-5, much like the stylish design and colors of the trainer, was designed to be sleek, intuitive, and user-friendly, with exciting new features. This design consideration ensured that the model was not only high-performing but also visually appealing and easy to use.

As Adidas GPT-5 was unveiled to the world, the people of AI-Town rejoiced. They could see how AI trainers could improve their everyday lives, and they wanted the cost to be reasonable so that everyone could have access. As the popularity of the trainer grew, people started to share their stories, and the designers at OpenAI-das and other trainer companies learned how to make trainers even better and safer for future generations to use.