What are LLMOps?

You will increasingly encounter the term 'LLMOps', short for Large Language Model Operations, across your social media platforms and within your professional circles.

LLMOps encompass the optimal management of the entire lifecycle of applications powered by Large Language Models (LLMs), from their creation to deployment and continuous upkeep. It's essential for developers and businesses interested in launching LLM-enabled applications to understand the operational necessities, infrastructure requirements, and associated costs.

At tokes compare, the world's first dedicated LLM and generative AI comparison site, we are seeing a massive spike in interest from developers and enterprises. They are scrambling to work out how to make the most of the AI revolution to automate and improve applications and services while keeping their costs to a minimum.

When choosing a model, you are likely to consider accuracy, speed, and cost. Additionally, you may evaluate the model's interpretability (how well its decision-making can be understood), bias (inherent tendencies), scalability (performance as data increases), maintenance (ease of updates or fine-tuning), generalizability (performance on unseen data), regulatory compliance, privacy and security, and integration (compatibility with your existing tech stack). However, in this blog, we focus on tokens and how they translate into costs.

Let's talk tokens

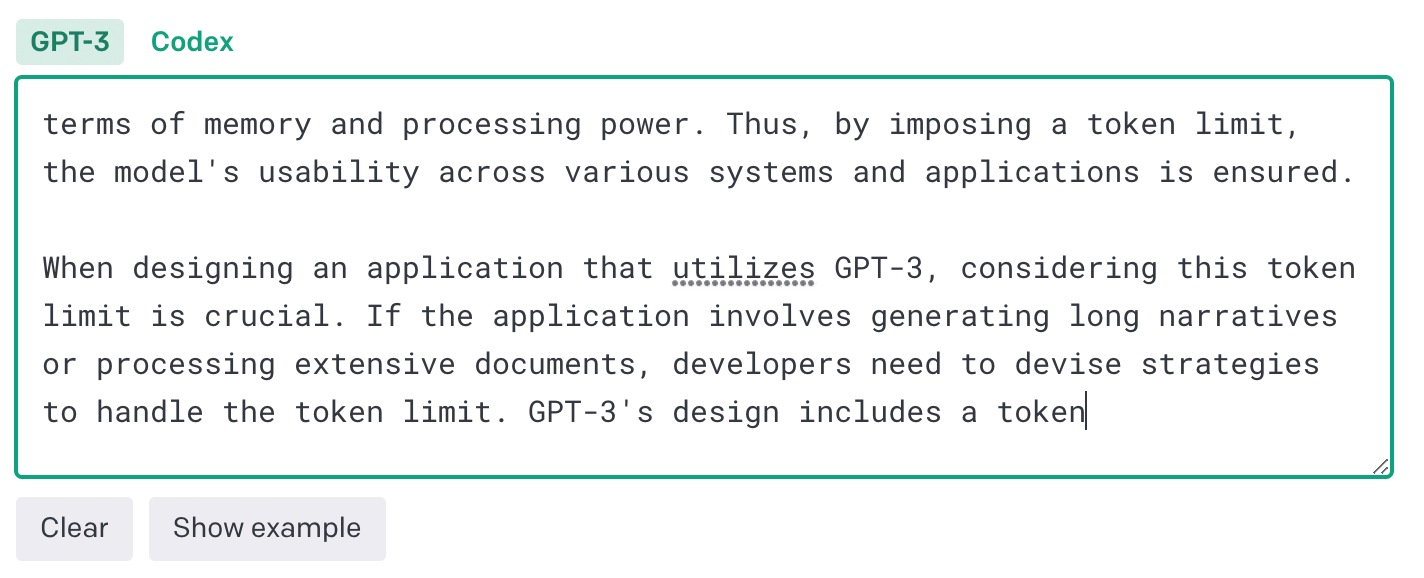

The concept of tokenization is central to the functioning of LLMs. The tokenization process is largely determined by the model's design and training. It involves breaking down the input text into smaller, manageable pieces (tokens) that the model can understand and process. In the context of LLMs, a token can be a word, subword, character, or byte (see the example of tokenization below, based on OpenAI's GPT-3 model).
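To make the four granularities concrete, here is a minimal sketch in plain Python. The `toy_subwords` helper and its fixed piece size are our own illustration, not how any real model tokenizes; production tokenizers learn their subword pieces from training data.

```python
text = "Tokenization matters"

# Word-level: split on whitespace.
word_tokens = text.split()

# Character-level: every character is its own token.
char_tokens = list(text)

# Byte-level: every UTF-8 byte is its own token.
byte_tokens = list(text.encode("utf-8"))


def toy_subwords(word, size=4):
    """Toy subword split into fixed-size pieces (illustrative assumption only)."""
    return [word[i:i + size] for i in range(0, len(word), size)]


# Subword-level: each word is broken into smaller pieces.
subword_tokens = [piece for word in word_tokens for piece in toy_subwords(word)]

for name, tokens in [
    ("word", word_tokens),
    ("subword", subword_tokens),
    ("character", char_tokens),
    ("byte", byte_tokens),
]:
    print(f"{name}: {len(tokens)} tokens")
```

The same text yields very different token counts depending on the granularity, which is why the tokenizer a model uses has a direct effect on what you pay.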

Many LLM services accessed through an API, including language models like GPT-4, Jurassic-2 or PaLM 2, are billed based on the number of tokens processed. The more tokens an application uses, the higher the cost (think of it as an LLM pay-as-you-go consumption model). Therefore, managing the number of tokens is essential for cost control. Interestingly, we are seeing LLM providers starting to advertise that they offer more text per token than other providers, with the aim of saving consumers money.

Below is an example based on OpenAI's GPT-3 model (you can access their free Tokenizer here). It shows that 4,801 characters were grouped into 1,000 tokens.
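As a rough illustration, the figures from this example can be turned into a quick token estimator. This is only a sketch: the `estimate_tokens` helper is our own, and it assumes the 4,801-characters-to-1,000-tokens ratio holds for your text, which will not be exactly true for other models, languages, or content types.

```python
# Figures taken from the tokenizer example in this post.
EXAMPLE_CHARACTERS = 4801
EXAMPLE_TOKENS = 1000


def estimate_tokens(text):
    """Rough token estimate from character count, rounded up.

    Uses integer arithmetic (ceiling division) to avoid floating-point
    rounding surprises.
    """
    return -(-len(text) * EXAMPLE_TOKENS // EXAMPLE_CHARACTERS)


chars_per_token = EXAMPLE_CHARACTERS / EXAMPLE_TOKENS
print(f"Average characters per token in the example: {chars_per_token:.2f}")
print(estimate_tokens("How many tokens will this prompt use?"))
```

For anything beyond a ballpark figure, count tokens with the tokenizer of the model you actually plan to use.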

In the example, we used enough characters to reach 1,000 tokens, because most LLM providers quote their prices per 1,000 tokens (written as 1/k tokens). Let's look at how this applies to some of the models for companies mentioned earlier:

1. Model selection

Choose the right LLM based on the output you want. Models have different architectures and capabilities and excel in different fields. Use the tokes compare website to select the right model to power your application, and contact the tokes compare team if you need further guidance.

2. Utilize cost estimation tools

Employ our tokes compare calculator and model-specific cost data to understand token expenses for application development and maintenance. We have heard that consumers find it challenging to grasp 1/k pricing because the prices are such small decimal amounts, so we recommend that developers, managers, and finance professionals calculate the number of tokens first (which for enterprises may run to hundreds of millions or billions per year). You should then compare the token prices of similar models to see your potential monthly or annual savings.
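The arithmetic is simple once the token volume is known: divide the tokens by 1,000 and multiply by the 1/k price. The sketch below illustrates this; the model names and prices are hypothetical placeholders rather than real provider prices, and `token_cost` is our own helper, not part of any calculator.

```python
from decimal import Decimal


def token_cost(tokens, price_per_1k):
    """Cost of processing `tokens` at a given price per 1,000 tokens."""
    return Decimal(tokens) / Decimal(1000) * price_per_1k


# Hypothetical prices in USD per 1,000 tokens (placeholders, not real quotes).
PRICES_PER_1K = {
    "model_a": Decimal("0.002"),
    "model_b": Decimal("0.0015"),
}

# Assumed annual volume for an enterprise application.
annual_tokens = 500_000_000

costs = {name: token_cost(annual_tokens, price) for name, price in PRICES_PER_1K.items()}
saving = costs["model_a"] - costs["model_b"]

for name, cost in costs.items():
    print(f"{name}: ${cost:,.2f} per year")
print(f"Potential annual saving: ${saving:,.2f}")
```

Using `Decimal` rather than floats keeps the tiny per-token prices exact, which matters once they are multiplied by hundreds of millions of tokens.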

3. Craft efficient prompts and ensure you have concise conversations

Reduce token costs by creating clear, concise prompts (user inputs), and consider fine-tuning the model so that it needs shorter prompts (prompt design and engineering is a great skill to learn). Keep the dialogue focused to limit token usage, and consolidate queries to improve efficiency.

4. Context management

Remove redundant messages from the conversation history to reduce the size of the prior context and, with it, prompt costs.
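One simple way to do this is to keep the system message plus only the most recent messages that fit within a token budget. The sketch below is a minimal illustration under stated assumptions: the message format (dictionaries with `role` and `content`), the `trim_history` helper, and the crude four-characters-per-token estimate are all ours, not any provider's API.

```python
def estimate_tokens(text):
    """Crude estimate: roughly four characters per token (an assumption)."""
    return max(1, len(text) // 4)


def trim_history(messages, max_tokens):
    """Keep the first (system) message plus the newest messages within budget.

    Assumes `messages` is non-empty and its first entry is the system message.
    """
    system, rest = messages[0], messages[1:]
    budget = max_tokens - estimate_tokens(system["content"])
    kept = []
    # Walk backwards so the most recent messages are kept first.
    for message in reversed(rest):
        cost = estimate_tokens(message["content"])
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    # Restore chronological order.
    return [system] + kept[::-1]


history = [
    {"role": "system", "content": "You are helpful."},
    {"role": "user", "content": "a" * 40},
    {"role": "assistant", "content": "b" * 40},
    {"role": "user", "content": "c" * 20},
]
trimmed = trim_history(history, max_tokens=20)
print([m["role"] for m in trimmed])
```

Dropping the oldest messages is the bluntest option; summarizing them instead keeps more of the meaning at a similar token cost.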

5. Context reset

Start fresh conversations to lower overall token usage and costs.

6. Leverage vector stores

Use vector stores (databases designed to store and retrieve vectors) to "cache" LLM responses and retrieve only the relevant context, reducing the frequency of API calls and the amount of context passed to the model. Five popular options are Faiss (Meta), Pinecone, Milvus, Postgres (pgvector), and Weaviate.
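The caching idea can be sketched without any particular vector database. In the example below, everything is an illustrative assumption: `embed` is a toy bag-of-words stand-in for a real embedding model, `SemanticCache` is an in-memory list rather than a real vector store, and `call_llm` is whatever function you use to reach your model.

```python
import math
from collections import Counter


def embed(text):
    """Toy embedding: a bag-of-words count vector (stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """In-memory stand-in for a vector store holding (vector, answer) pairs."""

    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []

    def lookup(self, question):
        vector = embed(question)
        best = max(self.entries, key=lambda entry: cosine(vector, entry[0]), default=None)
        if best is not None and cosine(vector, best[0]) >= self.threshold:
            return best[1]
        return None

    def store(self, question, answer):
        self.entries.append((embed(question), answer))


def answer(question, cache, call_llm):
    """Return a cached answer for a similar question, else call the model."""
    cached = cache.lookup(question)
    if cached is not None:
        return cached
    result = call_llm(question)
    cache.store(question, result)
    return result
```

Each cache hit is an API call you do not pay for. The similarity threshold is a trade-off: set it too low and users get answers to questions they did not ask.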

7. Handle long documents

Develop strategies to deal with documents exceeding the maximum context limit (the maximum number of tokens the model can process at one time). You can segment the document into smaller parts, use a sliding window approach (where you move through the document in overlapping sections, ensuring continuity of context), and employ abstractive summarization (or a combination of these strategies).
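The sliding window approach can be sketched in a few lines. The `sliding_windows` helper below is our own illustration; it works on any list of tokens, and the window size and overlap are values you would choose to suit your model's context limit.

```python
def sliding_windows(tokens, window_size, overlap):
    """Split `tokens` into windows of `window_size` that share `overlap` tokens."""
    if not 0 <= overlap < window_size:
        raise ValueError("overlap must be at least 0 and smaller than window_size")
    step = window_size - overlap
    windows = []
    for start in range(0, len(tokens), step):
        windows.append(tokens[start:start + window_size])
        # Stop once a window has reached the end of the document.
        if start + window_size >= len(tokens):
            break
    return windows


document = list(range(10))  # stand-in for a tokenized document
for window in sliding_windows(document, window_size=4, overlap=1):
    print(window)
```

Bear in mind that the overlapping tokens are processed, and billed, more than once, so a larger overlap buys continuity at a higher cost.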

8. Regulate completion length

Set a maximum token limit for model responses (completion) to prevent overly lengthy replies, balancing between cost and information quality.
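One way to pick the limit is to work backwards from what you are willing to spend per call. The sketch below is illustrative only: the prices are hypothetical, `max_completion_tokens` is our own helper, and the `max_tokens` field in the request is an assumed parameter name, so check your provider's documentation for the exact one.

```python
from decimal import Decimal


def max_completion_tokens(budget, prompt_tokens, input_price_per_1k, output_price_per_1k):
    """Largest completion, in tokens, that keeps a single call within `budget`."""
    prompt_cost = Decimal(prompt_tokens) / 1000 * input_price_per_1k
    remaining = budget - prompt_cost
    if remaining <= 0:
        return 0
    return int(remaining / output_price_per_1k * 1000)


# Hypothetical figures: a one-cent budget per call and placeholder 1/k prices.
limit = max_completion_tokens(
    budget=Decimal("0.01"),
    prompt_tokens=1000,
    input_price_per_1k=Decimal("0.003"),
    output_price_per_1k=Decimal("0.004"),
)

# Illustrative request payload; the field names are assumptions.
request = {
    "prompt": "Summarize the report in three bullet points.",
    "max_tokens": limit,
}
print(request["max_tokens"])
```

A hard cap can cut a reply off mid-sentence, so it works best alongside a prompt that asks for a short answer in the first place.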

9. Apply wait policies

Implement wait policies that hold back response generation until the user has stopped adding input, so that tokens are not spent on responses to incomplete requests.
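We read a wait policy as something like a debounce: collect the input and call the model only after a quiet period with no new additions. The sketch below follows that interpretation, which is our assumption. The `WaitPolicy` class is hypothetical, and timestamps are passed in explicitly so the behaviour is easy to follow and test.

```python
class WaitPolicy:
    """Buffer input and signal readiness only after a quiet period."""

    def __init__(self, quiet_seconds):
        self.quiet_seconds = quiet_seconds
        self.buffer = []
        self.last_input_at = None

    def add_input(self, text, now):
        """Record a new piece of input and restart the quiet-period clock."""
        self.buffer.append(text)
        self.last_input_at = now

    def ready(self, now):
        """True once no input has arrived for at least `quiet_seconds`."""
        if self.last_input_at is None:
            return False
        return now - self.last_input_at >= self.quiet_seconds

    def flush(self):
        """Return the combined prompt and reset the buffer."""
        prompt = " ".join(self.buffer)
        self.buffer = []
        self.last_input_at = None
        return prompt


policy = WaitPolicy(quiet_seconds=2.0)
policy.add_input("Compare these two models", now=0.0)
policy.add_input("on price per 1,000 tokens", now=1.0)
if policy.ready(now=3.0):
    print(policy.flush())  # one consolidated prompt, one model call
```

In a real application the timestamps would come from a clock such as `time.monotonic()`, and the flushed prompt would be sent to the model as a single request.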

10. Custom model training

Consider developing a bespoke model if these strategies do not sufficiently reduce costs.